- cross-posted to:

- amd

Definitely not as bad as Bulldozer was, not even close.

Starting to really dislike this 100% or 0% mentality, even if it’s just being used as the vehicle to carry the article. It’s what’s so wrong with the world today. It’s a gross oversimplification of reality.

That aside, I agree with the premise. With RDNA3 AMD established that it wasn’t willing to compete on price. 4000-series pricing left the door wide open for AMD to undercut and wholly outsell NVIDIA hardware by offering better price/performance value. But AMD chose not to take advantage of it, and oddly instead copied NVIDIA’s upselling strategy with its own pricing choices.

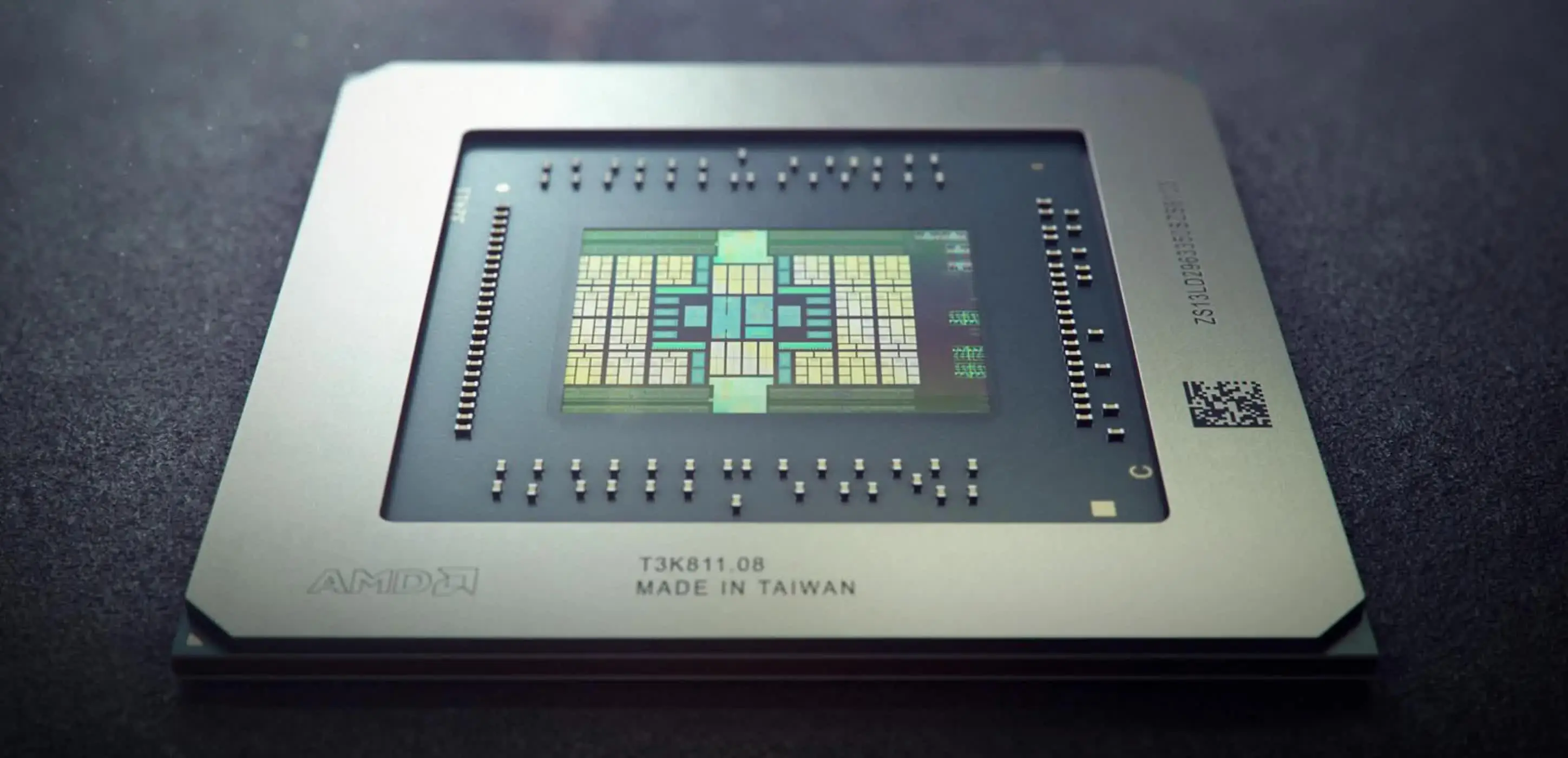

Strangely AMD made that decision despite realizing cost-savings from migrating to a chiplet design. If AMD is no longer willing (or able) to compete on best value, then as the article implies AMD needs to start competing for the performance crown again. AMD needs to at least pick a strategy and do something, because it simply doesn’t have any spare graphics marketshare left to waste.

From what I heard they don’t want high volume low margin gpus to take up fab capacity when they could instead use that for cpus

Then they should have made a fatter GCD, 250mm^2 vs 150mm^2, with 140 or something CUs, and added 3D stacked cache to feed it on the MCDs. They might have even had a plan to but flaked out. Beat 4090 on raster, and compete with 4080 on RT. I was hoping RDNA4 would do just that, but the rumors of AMD only doing mainstream for RDNA4 seem to point the opposite way.

I’ve heard that, and it’s plausible. But keep in mind the MCDs are made on 6nm, which AMD isn’t using for CPUs. Also, AMD is fabricating some APUs and EPYCs on TSMC 4nm already.

If AMD is suffering from limited wafer supply at 5nm then it absolutely has to adopt a lower volume, higher performance crown targeting approach to its GPU designs. Regardless of whatever the supply is today, wafer supply in the future is not going to be improving as everyone and everything funnels into the same future TSMC node groups.

I keep asking who is it that believes AMD have the most superior talent such that they are destined to blow out all competition over time?

RDNA2 was AMD’s zen3 moment when the stars aligned, but so was Ampere for Nvidia

I mean, that’s because Turing failed to impress in everything except the 2080 Ti.

Pascal was just too good, it’ll never happen again. Hence: Ada generation being so meh except the 4090.

Turing gen on gen is similar to RDNA3 and is exactly my point. AMD engineers are great, but don’t expect them to blow away every market they enter. Just like how XCLIPSE GPU showed that Qualcomm is competitive in mobile GPUs

More like “New Phenom II”, not bad but also not good

Yeah, that seems like a good comparison

Given that RDNA is in both major consoles and the steam deck it’s obviously a success for the company. Thou shalt not judge a GPU architecture on the steam survey alone.

Bulldozer was also in major consoles lol.

That was its baby brother Jaguar.

That was actually jaguar. Not that it was much better

Jaguar actually had better IPC than Bulldozer which was important at the low clocks the 8th gen ran at, and gave more of their power budget to the GPUs

It’s underwhelming compared to what Nvidia is pulling off with RT and DLSS. But they have an ironclad contract with Xbox and PlayStation.

Granted I see PlayStation switching some AMD stuff out for bespoke solutions in the future, Mark Cerny has been patenting a lot of stuff and Sony generally aren’t afraid to try some of their own hardware in a machine.

Specifically ray tracing, I recall Mark Cerny patenting some RT tech that a load of articles said would improve performance on PS5, but it featured hardware that just wasn’t in the PS5 lol.

What a dumbass title.

Another bulldozer? Bro, rdna2 in particular took the fight to Nvidia on both perf and price. The 6800xt was on par with the 3080 for less money. My 6700xt cost me $360 and is almost as fast as a 3070, while having 12gb of vram.

Also, the Radeon group’s budget is probably less than what Nvidia spends on a big corporate lunch. The products those people put out on a shoestring budget are honestly nothing short of miraculous.

Rdna3 disappointed me a little bit, but when priced correctly it’s not bad. The 7800xt at 450 or less is honestly a great buy. And if the 7700xt drops to 350-400, which it will, it’s a solid buy.

The 7600 is slowly inching towards 200…fantastic 1080p card.

We’ll have to see what rdna4 ends up delivering, but so far the products themselves are very good, as long as they’re priced appropriately.

It didn’t bankrupt the company, nor did it revolutionize the GPU industry, so… somewhere in the middle?

Zendozer

Underrated factor of Bulldozer’s downfall was GlobalFoundries 32nm node being a major disappointment. TSMC is a far cry from GF.

I mean… AMD spun off their fabs in 2008/2009, which eventually became glofo. To say glofo 32nm was bad in 2011 is really to say AMD 32nm was bad in 2011 because much of the 32nm research and development would have been locked in from when AMD was still running the fabs. I imagine they’d have been far worse, even, without the capital injections from Mubadala.

Didn’t help the APUs on 28nm.

Bulldozer was a poor architecture choice even if it had a great node to work off. High clocks and poor IPC was not a design for the future, plus the shared FPU meant that for floating point it was like you had half the cores, they had bet all that work would move to the GPU way too early and CPU performance needs obviously never went away.

Per MLID’s recent podcast, AMD is actually ‘proud’ of their GPU division. They’re punching well above their weight, considering their R&D budget is (probably) not even a quarter of Nvidia’s.

It’s pretty impressive when you think about it.

Their ‘secondary’ GPU division is the reason AMD has the lion’s share of the console market, the sole exception being the Switch. Though that might change in the future, now that Intel is finally serious about GPUs.

I still don’t understand why they ditched Larrabee way back when.

In any case, I personally don’t think Su is interested in graphics or ML, or at least she doesn’t sound too enthusiastic to me whenever she discusses Radeon. Her sole focus is - apparently - consumer CPUs and data centers.

Plus, I think Raja’s departure was a huge blow to Radeon, which ‘might’ explain why RDNA3 is so lackluster with absolute mediocre RT performance.

Per MLID’s recent podcast, AMD is actually ‘proud’ of their GPU division. They’re punching well above their weight, considering their R&D budget is (probably) not even a quarter of Nvidia’s.

Compared to AMD, Intel blew their budget on XeSS features no one asked for when their customers can just be using FSR, launched their top card first instead of their budget cards to have future Intel cards be associated with negative branding, and burned bridges with AIBs by not launching on time. That on top of losing $3.5 billion in value in the AXG group.

Intel should have launched their Arc 3 and mobile GPU cards their first year and, after optimizing their drivers, started making performance claims against an RTX 3070, when their transistor density is already exceeding a 6950XT with their A750 card.

Why in the world would Intel’s plan for upscaling be “nothing, let AMD take the lead”? The cost of entering this market was well known. “We lost money spending years developing a GPU generation” was a surprise to no one.

Arc’s primary goal is to deliver great iGPU performance in the mobile market, which has a 2x TAM vs desktop dGPU.

Yeah Raja didn’t do so hot for Intel, so I doubt the Radeon team suffered much with the loss…

Correct me if I’m wrong, but I believe Raja is a hardware guy. The issues Arc is facing are mostly software/API related. The hardware is sound.

Besides, Arc has far superior RT performance than Radeons, which is a pretty good indicator of what’s under the hood.

Raja oversaw the launch of an entirely new product into an existing market segment. And they did it during the toughest time out there. A pandemic with poor economic outcome. It wasn’t that the market didn’t take to the product. Just people are skittish about opening up their wallets today.

Intel essentially was one year too late and a year too early with their launch. If they had timed it earlier it would have been a home run during the pandemic fueled pc purchasing.

Arc launched with Ray Tracing, XeSS up scaling, and good performance per value. Their driver team is still working hard and launching home runs with each delivery. They only lack a high end GPU capable of 400 watt and frame generation technology.

Which, to be absolutely fair to anybody, they aren’t expected to get right on the first product launch. NVIDIA is on something like its 20th-plus generation of this thing called a discrete graphics unit.

And they did it during the toughest time out there. A pandemic with poor economic outcome.

And a war that caused the relocation of the team responsible for the Arc drivers (in Russia) due to sanctions.

Can you elaborate more on this? This is the first time hearing about relocation for the driver team?

Their R&D budget is probably not a quarter of Nvidia’s and their profits probably aren’t even 1/10th, not sure how that’s a good ratio. Clown take from MLID (like always).

7900 xtx is looking very reasonably priced, right?

It looks very reasonably priced compared to the 4080. In the vacuum it’s overpriced compared to the improvements from 6800XT/6900XT.

I think they’re both fine at their sale prices, but are a hard sell at their MSRP. The XTX on sale for $800 seems like a good value and the 4080 at $1000 isn’t unreasonable either.

Bulldozer was the exact opposite in terms of how the units behaved. Currently, it’s nvidia with the ‘make moar corez’ meme, and AMD could have been in the reckoning for the top spot in raster with a chip that has like a third of the shaders of nvidia’s.

AMD’s failing this gen is again not improving the clockspeeds. And of course, not making a chip the size of nvidia’s flagship.

As for RT, I don’t think the difference is that big hardware-wise. AMD are overperforming in raster and nvidia have sort of a software monopoly right now with the PT game being done with their collaboration.

If AMD is not making a chip that is on par with or bigger than nvidia’s flagship, then the whole MCM design becomes meaningless. They are taking a penalty in PPA and power efficiency due to the interconnect between chips. What I expected was that AMD would push the performance of their flagship to the next level, but what I see is that they are betting on higher frequency with a much smaller shader array (very ATI-style) while using MCM to save cost. Fortunately they failed, but they will never learn.

Nah, rumors of RDNA 4 were 3.5ghz on a modest spec bump (someone insane also mentioned a near doubling in spec and also 3.5ghz but no one should take that seriously), which of course was cancelled and now RDNA 5 will end up near 4ghz with a moderate spec bump

Did your source ever mention there is probably a dual-chip design of RDNA4? Some people hinted that for me after the rumor which said high-end RDNA4 chips got canceled came out.

Yeah, that was the hope when the dual-issue shaders were being rumored as 12288 shaders on Navi31, almost 2.5x of 6900XT. Combined with arch improvements, higher clocks and 2nd gen RT, it would have easily done 2.5x of 6900XT performance.

RDNA3 dual issue is so much more useless than Nvidia’s, they never talk about it, but still use it to talk about peak compute throughput.

Notice how 7900XTX is significantly more ‘cores’ and more TFLOPs than 4080 but is 5% better at best
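For anyone who wants the arithmetic behind the last few comments, here is a rough back-of-the-envelope sketch. The shader counts are the commonly quoted spec-sheet figures, while the boost clocks and the helper name `peak_fp32_tflops` are assumptions for illustration, not anything from the article. It only shows paper throughput, which is exactly the number the comments above say doesn’t translate into frames.

```python
def peak_fp32_tflops(shader_lanes: int, boost_ghz: float) -> float:
    """Paper FP32 peak: lanes * 2 FLOPs per FMA * clock in GHz, scaled to TFLOPs."""
    return shader_lanes * 2 * boost_ghz / 1000.0


# Spec-sheet shader counts (Navi31 counted with dual issue, as in the thread).
NAVI31_LANES_DUAL_ISSUE = 12288  # 96 CUs * 64 lanes * 2
RX_6900XT_LANES = 5120           # 80 CUs * 64 lanes
RTX_4080_LANES = 9728

# Assumed boost clocks in GHz; real cards vary by model and workload.
XTX_BOOST_GHZ = 2.5
RTX_4080_BOOST_GHZ = 2.505

# "Almost 2.5x of 6900XT" on shader count alone.
shader_ratio = NAVI31_LANES_DUAL_ISSUE / RX_6900XT_LANES

# Paper throughput of the two cards being compared.
xtx_tflops = peak_fp32_tflops(NAVI31_LANES_DUAL_ISSUE, XTX_BOOST_GHZ)
rtx_4080_tflops = peak_fp32_tflops(RTX_4080_LANES, RTX_4080_BOOST_GHZ)
paper_advantage = xtx_tflops / rtx_4080_tflops

print(f"Navi31 vs 6900XT shader ratio: {shader_ratio:.2f}x")
print(f"7900XTX paper peak: {xtx_tflops:.1f} TFLOPs")
print(f"4080 paper peak:    {rtx_4080_tflops:.1f} TFLOPs")
print(f"Paper advantage:    {paper_advantage:.2f}x")
```

Under these assumptions the XTX has roughly a quarter more paper FP32 than the 4080, which is nowhere near the “5% better at best” mentioned above. That gap is the whole complaint about counting dual-issue lanes.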

The problem is that where they are falling isn’t a massive issue today but will be in 5-10 years.

This situation with AMD tech (FSR) being just basically the nvidia equivalent but worse and their refusal to incorporate any hardware into the equation to keep up is going to cause a massive vacuum in the value proposition. (It already is to a certain extent)

This is a good point. Some FSR acceleration on either AMD cards or processors, while retaining its ability to be used on different hardware, could help bridge the gap, if it’s possible.

AMD has been playing catch up for very long. They finally got an edge on intel gaming with the X3D chip lineup. I wonder if the same tech would benefit their graphics cards.

Some FSR acceleration on either AMD cards or processors, while retaining its ability to be used on different hardware, could help bridge the gap, if it’s possible.

This is pretty much how intel’s XeSS works, it will look best on an ARC gpu but will work with other brands albeit with lower quality

Honestly I think it should become a lot better in 5 to 10 years, since a lot of their problems seem to come from being understaffed. Keep in mind, they were a fairly small company on the verge of bankruptcy just a few years ago, and you can only onboard so many people each year without causing inefficiency.

So their competence in software as well as hardware should be improving, not getting worse.

Only if they do a complete 180 in philosophy as a course correction, which I just don’t see being the case; they’d have to admit that nvidia (and intel) are correct and their way was a mistake.

AMD GPU division is usually more than willing to double down on their mistakes when it comes to vision/philosophy over admitting that they made a mistake.